If you read the words Flow and Test in the same sentence, what would you expect the article to be about? My first thought was “how to test a flow in order to avoid issues,” something like this one.

However, this entry focuses on Test Coverage, a key point that can become a pain if you need to deploy Flows as active. The reason: like Apex code, they also require at least 75% test coverage for the deployment to succeed.

Flow Test Coverage? I thought this was a #clicksnotcode tool…

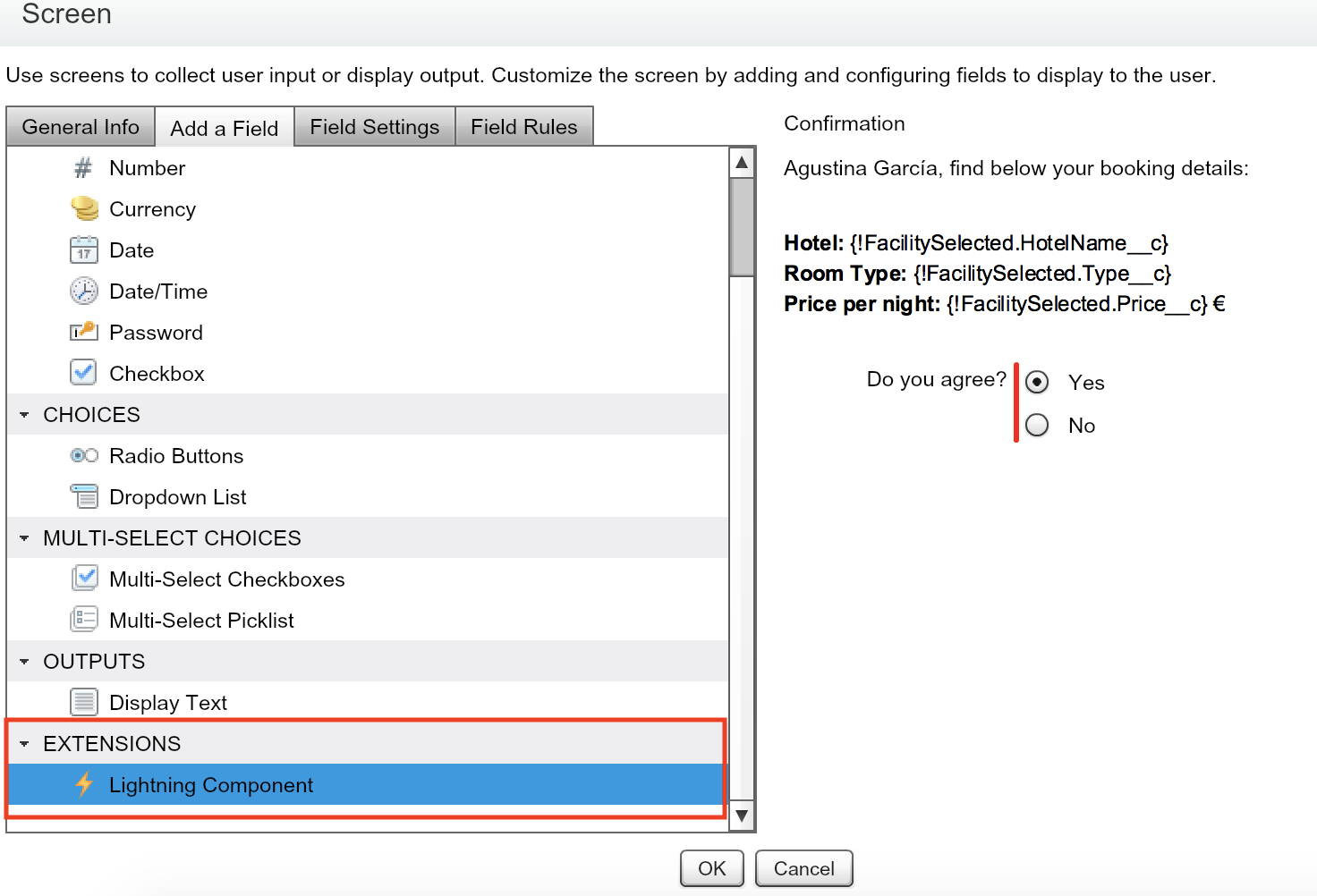

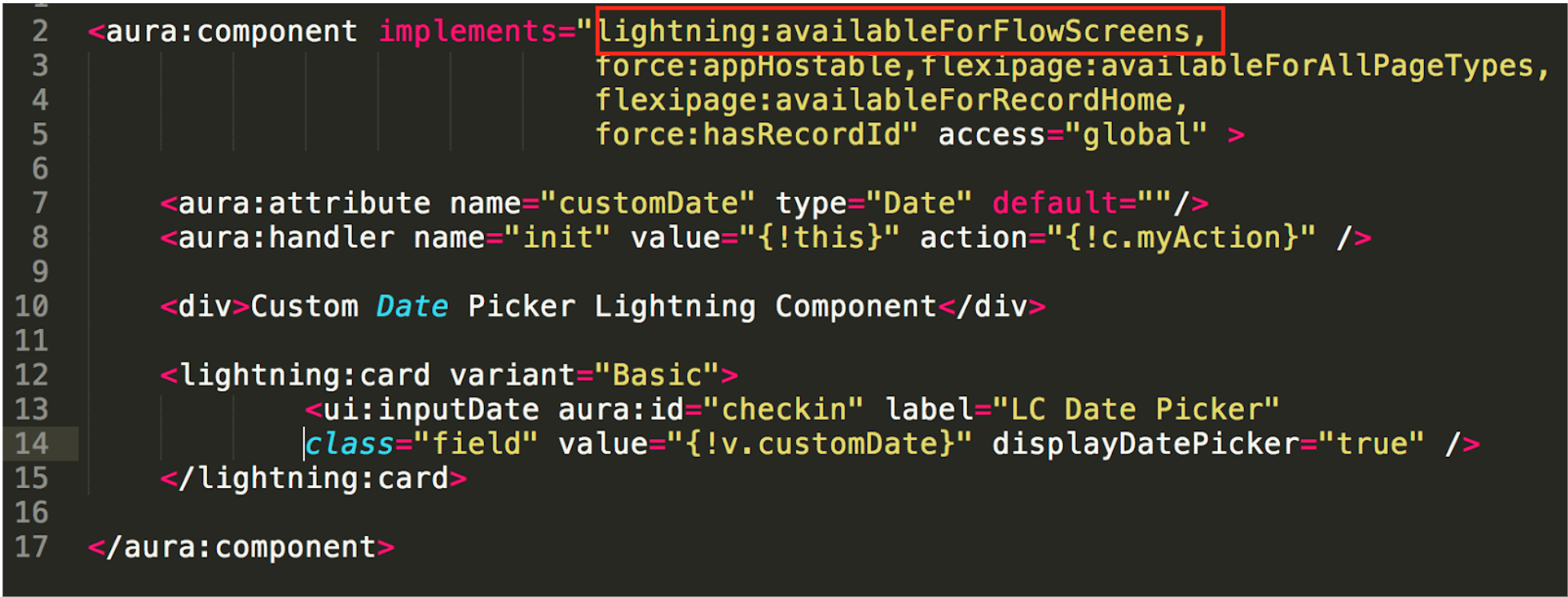

Yes, and it may not make sense if you are working with Screen Flows. In fact, we do not need to worry about test coverage for that kind of flow. However, you do need to keep in mind flows that run without screens, such as autolaunched flows. You can find more information here.

And how can we do it? As the documentation says, via a trigger.

Let’s try to explain it with an example.

If you have read some of my other entries, you will already know that my Flow examples are based on a Hotel Reservation App, so the example below is related to Reservations. The purpose of the autolaunched flow is to send an email to the guest once they pay for the reservation.

To make it work, I need to call it from my trigger:

```apex
trigger ReservationTest on Reservation__c (before insert, before update) {
    for (Reservation__c r : Trigger.new) {
        if (r.isPaid__c) {
            System.debug('Run the email flow');

            // Pass the guest's email to the flow's input variable guestEmail
            Map<String, Object> params = new Map<String, Object>();
            params.put('guestEmail', r.guestEmail__c);

            // Start the autolaunched flow whose API name is EmailFlow
            Flow.Interview.EmailFlow flowToSendEmail = new Flow.Interview.EmailFlow(params);
            flowToSendEmail.start();
        }
    }
}
```

Now that we have the new flow, we need to check its coverage status. The first step is to identify all active flows and processes in the org, since they are the basis for calculating coverage.
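A side note on the trigger above before checking coverage: because it runs on every insert and update, EmailFlow starts again each time a paid reservation is saved, not only at the moment it gets paid. A possible variant is sketched below, under the assumption that the email should go out once, when isPaid__c changes to true. ReservationPaidEmail is a hypothetical name, and the trigger is meant to replace ReservationTest rather than run alongside it.

```apex
// Hypothetical replacement for ReservationTest: start EmailFlow only when a
// reservation becomes paid, not on every save of an already paid reservation.
trigger ReservationPaidEmail on Reservation__c (before insert, before update) {
    for (Reservation__c r : Trigger.new) {
        // On insert there is no previous version of the record; on update,
        // check whether the reservation was already paid before this save.
        Boolean wasPaid = Trigger.isUpdate
            && ((Reservation__c) Trigger.oldMap.get(r.Id)).isPaid__c;

        if (r.isPaid__c && !wasPaid) {
            // Same flow and input variable as the original trigger
            Map<String, Object> params = new Map<String, Object>{
                'guestEmail' => r.guestEmail__c
            };
            Flow.Interview.EmailFlow flowToSendEmail = new Flow.Interview.EmailFlow(params);
            flowToSendEmail.start();
        }
    }
}
```

A test that inserts a paid reservation still reaches the flow through this trigger, so the coverage steps that follow apply in the same way.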

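To list the active flows and processes, run this query (Flow is a Tooling API object, so tick *Use Tooling API* in the Developer Console Query Editor):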

```sql
SELECT MasterLabel,
       Definition.DeveloperName,
       VersionNumber,
       ProcessType
FROM Flow
WHERE Status = 'Active'
  AND (ProcessType = 'AutolaunchedFlow'
    OR ProcessType = 'Workflow'
    OR ProcessType = 'CustomEvent'
    OR ProcessType = 'InvocableProcess')
```

We get the result below, which shows two autolaunched flows. One of them is EmailFlow, the one used in this example.

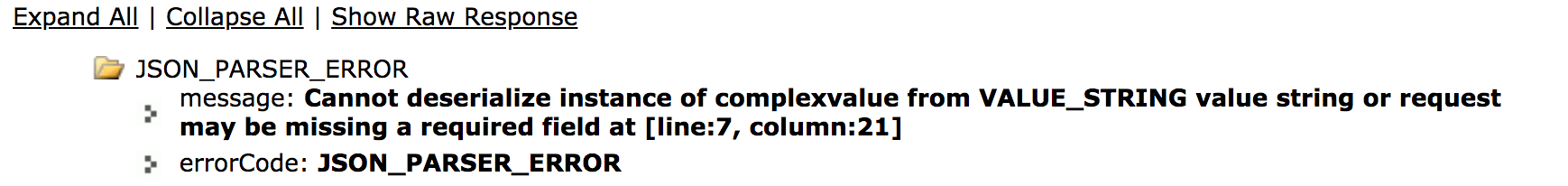
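If you prefer to run the query above from Apex instead of the Query Editor, the Tooling API's REST query endpoint can be called from anonymous Apex. This is a minimal sketch, assuming API version v46.0 and a Remote Site Setting that allows callouts to the org's own My Domain URL; it reuses the running user's session for authentication.

```apex
// Anonymous Apex sketch. Assumptions: API version v46.0 and a Remote Site
// Setting that allows callouts to this org's own My Domain URL.
String soql = 'SELECT MasterLabel, Definition.DeveloperName, VersionNumber, ProcessType'
    + ' FROM Flow WHERE Status = \'Active\''
    + ' AND (ProcessType = \'AutolaunchedFlow\' OR ProcessType = \'Workflow\''
    + ' OR ProcessType = \'CustomEvent\' OR ProcessType = \'InvocableProcess\')';

HttpRequest req = new HttpRequest();
req.setMethod('GET');
// Tooling API query endpoint on the current org's domain
req.setEndpoint(URL.getOrgDomainUrl().toExternalForm()
    + '/services/data/v46.0/tooling/query/?q='
    + EncodingUtil.urlEncode(soql, 'UTF-8'));
// Authenticate the callout with the running user's session
req.setHeader('Authorization', 'Bearer ' + UserInfo.getSessionId());

HttpResponse res = new Http().send(req);
System.assertEquals(200, res.getStatusCode(), res.getBody());

// The JSON response holds a "records" array: log one line per active flow version
Map<String, Object> body = (Map<String, Object>) JSON.deserializeUntyped(res.getBody());
for (Object rec : (List<Object>) body.get('records')) {
    Map<String, Object> flowVersion = (Map<String, Object>) rec;
    System.debug(String.valueOf(flowVersion.get('MasterLabel'))
        + ' (version ' + String.valueOf(flowVersion.get('VersionNumber'))
        + ', ' + String.valueOf(flowVersion.get('ProcessType')) + ')');
}
```

Swapping in the other queries from this post (for example, the FlowTestCoverage one) only requires changing the soql string.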

On the other hand, Salesforce provides an object to check coverage, FlowTestCoverage, which we will use to check EmailFlow. However, it only contains a record once some of a flow's elements are covered, so the easiest way to start is to modify the previous query with a filter that excludes the Ids found in FlowTestCoverage. That returns the active flows and processes with no coverage at all:

```sql
SELECT MasterLabel,
       Definition.DeveloperName,
       VersionNumber,
       ProcessType
FROM Flow
WHERE Status = 'Active'
  AND (ProcessType = 'AutolaunchedFlow'
    OR ProcessType = 'Workflow'
    OR ProcessType = 'CustomEvent'
    OR ProcessType = 'InvocableProcess')
  AND Id NOT IN (SELECT FlowVersionId FROM FlowTestCoverage)
```

The result is the same as before, since none of the flows has coverage yet, so we can start writing our first test to cover EmailFlow.

```apex
@isTest
public class EmailFlowApexTest {

    @isTest
    static void validateEmailFlow() {
        // A paid reservation makes the trigger start EmailFlow
        Reservation__c r = new Reservation__c();
        r.isPaid__c = true;
        r.guestEmail__c = 'a@ff.com';
        insert r;

        List<Reservation__c> rList = [SELECT Id, isPaid__c, guestEmail__c
                                      FROM Reservation__c WHERE Id = :r.Id];
        // assertEquals compares the values; System.assert(true, ...) would always pass
        System.assertEquals(true, rList.get(0).isPaid__c);
    }
}
```

This test covers the trigger that starts EmailFlow, so if we run the previous query again, EmailFlow no longer appears in the result. By querying FlowTestCoverage, we can now start getting some information about our work:

```sql
SELECT Id,
       ApexTestClassId,
       TestMethodName,
       FlowVersionId,
       NumElementsCovered,
       NumElementsNotCovered
FROM FlowTestCoverage
```

In the result, we can see that FlowVersionId matches our flow version, and that TestMethodName is the method we defined above, validateEmailFlow.

Now I wonder: what if our flow has more than one step? What happens to the result above? Let’s find out. After the email is sent, a Decision element checks the total amount of the reservation, and each outcome then does an assignment to a certain object. We could keep adding complexity to the flow, but let’s keep it simple for now.

The first step is to modify the test so that the reservation has an amount above or below the decision threshold.

```apex
@isTest
public class EmailFlowApexTest {

    @isTest
    static void validateEmailFlow() {
        // Paid reservation whose amount drives one outcome of the Decision
        Reservation__c r = new Reservation__c();
        r.isPaid__c = true;
        r.guestEmail__c = 'agarcia@financialforce.com';
        r.TotalAmount__c = 100;
        insert r;

        List<Reservation__c> rList = [SELECT Id, isPaid__c, guestEmail__c
                                      FROM Reservation__c WHERE Id = :r.Id];
        System.assertEquals(true, rList.get(0).isPaid__c);
    }
}
```

With this change, we run the query below again:

```sql
SELECT Id,
       ApexTestClassId,
       TestMethodName,
       FlowVersionId,
       NumElementsCovered,
       NumElementsNotCovered
FROM FlowTestCoverage
```

We get a result similar to the previous one. The only difference is that NumElementsCovered is 3 and NumElementsNotCovered is 1. That is because our flow now has 4 elements (the core one that sends the email, the decision, and the 2 assignments), and our test only covers one of the assignments.
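Reading those two fields as a ratio gives the share of EmailFlow's elements exercised by validateEmailFlow. This is only arithmetic on the FlowTestCoverage fields, not necessarily how Salesforce computes the figure it checks at deployment:

$$
\frac{\text{NumElementsCovered}}{\text{NumElementsCovered} + \text{NumElementsNotCovered}} = \frac{3}{3 + 1} = 0.75 = 75\%
$$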

So the next step is to add a second method that covers the other decision path.

```apex
@isTest
public class EmailFlowApexTest {

    @isTest
    static void validateEmailFlow() {
        // Amount of 100 follows the first outcome of the Decision
        Reservation__c r = new Reservation__c();
        r.isPaid__c = true;
        r.guestEmail__c = 'agarcia@financialforce.com';
        r.TotalAmount__c = 100;
        insert r;

        List<Reservation__c> rList = [SELECT Id, isPaid__c, guestEmail__c
                                      FROM Reservation__c WHERE Id = :r.Id];
        System.assertEquals(true, rList.get(0).isPaid__c);
    }

    @isTest
    static void validateEmailFlowWithAmountLessThan50() {
        // Amount of 25 follows the other outcome of the Decision
        Reservation__c r = new Reservation__c();
        r.isPaid__c = true;
        r.guestEmail__c = 'agarcia@financialforce.com';
        r.TotalAmount__c = 25;
        insert r;

        List<Reservation__c> rList = [SELECT Id, isPaid__c, guestEmail__c
                                      FROM Reservation__c WHERE Id = :r.Id];
        System.assertEquals(true, rList.get(0).isPaid__c);
    }
}
```

This time, the query returns an additional record for the second test method, validateEmailFlowWithAmountLessThan50.

We can also see that both records have the same NumElementsCovered and NumElementsNotCovered values, because each method covers a single path.
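The two test methods above differ only in TotalAmount__c, so the shared setup can move into a helper. Below is a sketch of that refactoring. EmailFlowPathsTest and insertPaidReservation are hypothetical names, and the threshold of 50 is only inferred from the method name validateEmailFlowWithAmountLessThan50, so adjust the amounts to the real Decision filter. Wrapping the insert in Test.startTest()/Test.stopTest() gives the trigger and the flow a fresh set of governor limits.

```apex
// Sketch: the same two decision paths as EmailFlowApexTest, with shared setup
// in a helper. The threshold of 50 is an assumption taken from the method name.
@isTest
private class EmailFlowPathsTest {

    // Hypothetical helper: inserts a paid reservation with the given amount
    // (which fires the trigger and EmailFlow) and returns it re-queried.
    private static Reservation__c insertPaidReservation(Decimal amount) {
        Reservation__c r = new Reservation__c(
            isPaid__c = true,
            guestEmail__c = 'guest@example.com',
            TotalAmount__c = amount
        );
        Test.startTest();
        insert r;
        Test.stopTest();
        return [SELECT Id, isPaid__c, TotalAmount__c FROM Reservation__c WHERE Id = :r.Id];
    }

    @isTest
    static void coversAmountAtOrAbove50() {
        Reservation__c r = insertPaidReservation(100);
        System.assertEquals(true, r.isPaid__c);
    }

    @isTest
    static void coversAmountBelow50() {
        Reservation__c r = insertPaidReservation(25);
        System.assertEquals(true, r.isPaid__c);
    }
}
```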

Note: whenever I modified my trigger, it took a while (sometimes as long as a day) before the query returned updated results. It seemed Salesforce needed some time to process the new test coverage.

Finally, I would like to talk about how we know whether we have reached the 75% required to deploy the flow. Unfortunately, Salesforce does not provide this figure yet, but it does explain how to calculate it manually:

For example, if you have 10 active autolaunched flows and processes, and 8 of them have test coverage, your org’s flow test coverage is 80%.

Salesforce help: https://sforce.co/2PqALZw
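Written as a formula, the org-wide figure in that help text is a ratio of flows, not of flow elements. With the numbers from the example:

$$
\text{Flow test coverage} = \frac{\text{active autolaunched flows and processes with test coverage}}{\text{all active autolaunched flows and processes}} = \frac{8}{10} = 80\%
$$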

Given that, if my org has only a single flow and it has some test coverage, my flow coverage is 100% according to the definition above. However, I would not be surprised if Salesforce also looked into each flow and counted its covered elements. In that case, with 4 elements and 2 of them covered, coverage would be 50%, and covering 3 would reach the required 75%. Keep this in mind, just in case.

Update 20190726

While writing this post, I noticed some odd behaviour with tests. If I removed a test method that covered a flow and ran the query again, the results were the same as if the test were still in place. Because of that, I raised a case with Salesforce to learn more, and I would like to share their final answer with you.

When we first run a test class, it adds a record to the FlowTestCoverage object for each method. If we modify the class to add a new method and run it again, it adds a new record for that method. But if we remove a method, its record is not deleted. The record is only removed when a change is made to the associated flow version.

If we delete the test class completely, all FlowTestCoverage records for that class are removed, since the class no longer exists.

If you want the FlowTestCoverage object to reflect changes made to test classes, the best way is to create a new version of the flow and run the test classes against that new version.

I hope it helps others.

What can we highlight?
